LIFE IN A DIGITAL WORLD by Martha Buskirk

What happens in the intersections between our physical and virtual lives? Starting with the phones in our pockets, almost all of us have willingly traded away some degree of privacy for convenience. It only gets worse from there, as “smart” devices of all kinds work their way into daily use. Beyond privacy concerns, the everyday sharing of found or modified content potentially puts us at odds with a relentless ownership culture built on copyright’s pervasive reach.

As activities shifted online during the pandemic, individuals and organizations increasingly felt the impact of algorithms that decide in an instant whether what we post infringes copyright. Some of the problems stem from layered rights: a recording of a performance can be protected even when the composition being played is in the public domain. Classical musicians attempting to replace live concerts with streaming have been cut off mid-movement when their renditions aligned too closely with the digital signature of previous recordings. The Takedown Hall of Shame, maintained by the Electronic Frontier Foundation (EFF), also notes multiple copyright claims against an extended video of “original” white noise.

Algorithms are at their worst where quotations of copyrighted material should be protected by fair use exceptions. The stage was set in 2007, when Stephanie Lenz uploaded to YouTube a video of her toddler dancing with Prince’s “Let’s Go Crazy” playing in the background (eons ago in internet time, since YouTube itself was then only two years old). When Universal hit her with a takedown notice, the EFF rose to her defense with a lawsuit that dragged on for more than a decade, along the way producing an appeals court ruling that copyright holders must consider fair use before sending takedown notices. In the meantime, automation has made the problems far more pervasive.

On the eve of the pandemic, New York University School of Law held an in-person copyright panel that explored how to judge when one music composition is “substantially similar,” in legal terms, to another. Not surprisingly, clips of songs were played, and when the organizers uploaded video documentation, it was flagged by YouTube’s Content ID. They quickly discovered what most young people already know: YouTube does have a mechanism for disputing such claims, but if you challenge a claim and lose, you are subject to a copyright strike that can result in suspension or termination of your account. Despite the assembled expertise, the organizers weren’t sure whether multiple claims against a single video might generate a series of strikes that could wipe out the School of Law’s entire video presence at once. They were able to use back-channel communications to get the problem resolved, but few are so lucky.

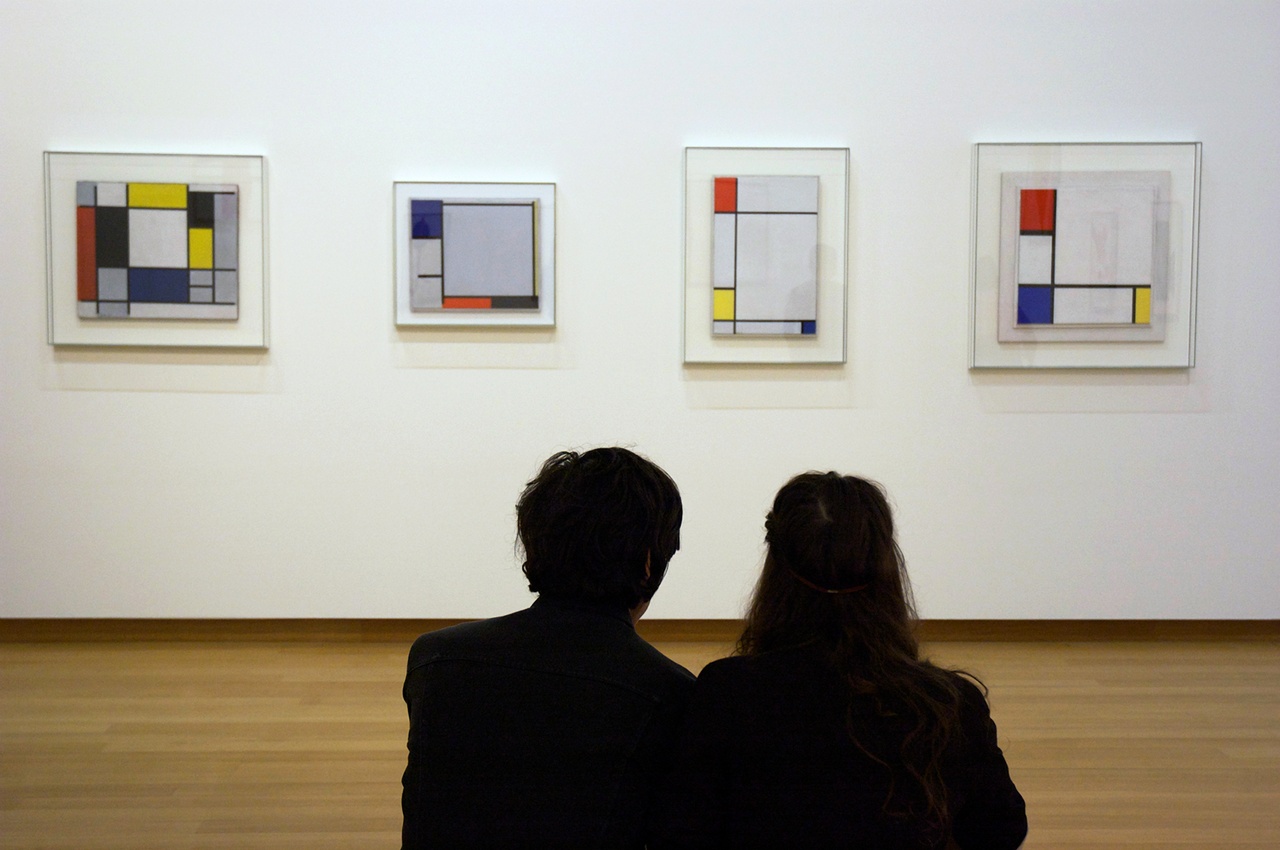

Liselot van der Heijden, “Untitled, Stedelijk I,” 2011

There’s also the matter of weaponizing copyright. Some police officers seem to have hit upon a strategy of playing music to keep footage of their interactions with the public off the internet, in an apparent attempt to trigger automatic filters that block livestreaming as well as uploads. But strategic copyright deployment has also been used to fill gaps in protection, including certain privacy rights. In the United States, individuals trying to get nude images that were shared without their consent taken down may have better luck filing a copyright claim (governed by federal law) than relying on the inconsistent patchwork of state privacy provisions.

Given how far the US lags behind Germany and many other countries in data protection, it is fortunate that Illinois has its 2008 Biometric Information Privacy Act, which makes it illegal to use personal biometric data, including iris scans, fingerprints, voiceprints, and face scans, without consent. Facial recognition strategies developed by social media companies have prompted various Illinois lawsuits, and the revelations about a company called Clearview AI have been especially alarming: it has scraped social networks and other sources (often in apparent violation of user agreements) to amass a huge database of human faces that allows real-time identification of people in public spaces. Among the users of its services have been law enforcement agencies, including some in places where such practices are forbidden by local regulations. Based on information revealed by lawsuits against Clearview in Illinois and California, legislators elsewhere have been rushing to enact their own protections.

Of course, any use of social media platforms requires a devil’s bargain – taking advantage of their free services comes at the cost of handing over volumes of personal data. In the US, growing agitation against Section 230 of the Communications Decency Act, passed in 1996, has been framed as striking back against the platforms that dominate our communications. Yet efforts to rein in the power of these corporations have to be balanced against the dangers of undermining the “safe harbor” provisions that protect internet platforms from liability for material uploaded by users. The emphasis on licensing in Article 17 (originally Article 13) of the Copyright Directive adopted by the EU in 2019 has raised similar concerns that preemptive filtering and blocking could curtail the circulation of online information.

The perils of overly aggressive content filtering for cultural criticism or pedagogy should be obvious. But other dangers arise from the ways legal frameworks differ from one country to the next, in a world where online exchange is frequently accompanied by the movement of physical objects. The Jeff Koons retrospective at the Centre Pompidou may have been largely the same exhibition that debuted at the Whitney in 2014, but it provoked a whole new volley of copyright lawsuits over sculptures from Koons’s 1988 Banality series. Although his copyright fortunes in the US were on the upswing, Koons did not fare well in the French courts, and his efforts to appeal resulted in even higher penalties, including fines for reproducing the work in question on his own website.

Even in the US, where fair use has been part of the copyright code since 1976, outcomes have been decidedly mixed – and a recent case involving Andy Warhol might suggest that the Court of Appeals for the Second Circuit gets a kick out of overturning district court fair use decisions. In 2013, it declared the majority of Richard Prince’s riffs on Patrick Cariou’s Rastafarian photos transformative, reversing a lower court finding of infringement. Different Prince, different ruling, however, as the Warhol Foundation recently experienced in relation to a photo of the musician who for a time replaced his name with a symbol to protest record label control over his output. A district court found fair use in a series of silk-screen images of Prince that Warhol based on a photograph by Lynn Goldsmith, but “not so fast,” said the appeals court, ruling, rather paradoxically, that Warhol’s works were not “transformative” even though they “display the distinct aesthetic sensibility that many would immediately associate with Warhol’s signature style.”

Fair use advocacy for artists interacting with preexisting images needs to be accompanied by equal support for criticism and commentary – a proposition made that much more pressing by the pandemic-induced rush to online delivery (and anyone trying to talk about cultural objects should certainly be wary of signing agreements that put all the risks on speakers rather than hosting institutions). There are many other issues to consider as in-person and online existences become increasingly interwoven.

Screenshot, “AEG Turbine Factory,” Google Maps

For me, one upshot of online pandemic teaching was the absence of fun field trips to break up the monotony of sitting around talking in a classroom (a monotony for which I am now deeply nostalgic), and I made various attempts to substitute virtual experiences for these trips. As we used Google Street View to traverse Berlichingenstraße along Peter Behrens’s AEG Turbine Factory building in Berlin, I was struck not just by the opportunity to see atypical aspects of this much-reproduced landmark, but also by something unrelated: the blurring of one of the buildings on the other side of the street. Even though Germany has more expansive freedom of panorama rights than the US, stronger privacy provisions mean that Google is required to give residents the ability to opt out of its pervasive visual record, accomplished through selective blurring.

The image by Liselot van der Heijden reproduced on the cover of my recent book, Is It Ours? Art, Copyright, and Public Interest, reflects a logical extrapolation. If filters can stop us from uploading material – including content that should be protected by fair use – how long until we are prevented from the very act of recording? And to the extent that we become reliant on digital rather than analog devices to aid seeing and hearing, is it possible that we might be preemptively blocked from perceiving in the first place?

Martha Buskirk is a Professor of Art History and Criticism at Montserrat College of Art and author, most recently, of Is It Ours? Art, Copyright, and Public Interest (University of California Press, 2021).

Image credit: © Liselot van der Heijden. Courtesy the artist.